Last week we sat down with a UX researcher at a French unicorn to discuss how she uses tags to flag user feedback and interview excerpts, highlighting interesting requests or frustrations so that she can later identify common patterns across all the data.

The entire conversation could be summarized as:

Manually Tagging User Feedback just sucks!

It's not the first time we've heard this. Manually tagging user feedback creates a multitude of problems and frustrations shared by many members of Product and CX teams.

Specifically, she told us that manually tagging user feedback presents the following problems:

- It takes time

As with every manual activity, tagging feedback is time-consuming. You need to read hundreds or thousands of pieces of feedback one by one, find the relevant information, pick the right tag, and apply it.

- Tagging feedback is frustrating because it doesn’t immediately show you its value

Tagging feedback one by one doesn't immediately give you an idea of the value you can get out of it, and it makes you feel like you are wasting your time (we've done it many times ourselves, and we feel for you).

- It’s almost always biased by the convictions and the laziness of the person who does it

When you have to analyze high volumes of user feedback, it’s almost impossible to get an impartial overview of what it contains. Everyone in Product, Customer Support, Customer Success, and UX research has their own priorities and convictions. With these biases, you are always tempted to highlight the topics you care about, and you might miss important information.

- In most cases, tagging feedback is done inaccurately because no one wants to spend time finding the right tag from a huge list

Having to choose from an endless list of tags makes you lazy and superficial when picking the right ones.

- It’s inconsistent across multiple teams because everyone uses different taxonomies

The taxonomy used to tag feedback is an underestimated topic, and in most cases it varies across teams and countries within the same organization. These inconsistencies prevent feedback from being tagged properly.

- Tags can be too broad and not actionable

If a taxonomy is not defined carefully, it might include tags that are simply too broad, making them useless for precise analysis.

- When tagging is done poorly (99% of the time) you can’t learn from it

The inconsistency across teams and the redundancy of tags, which are rarely revisited or updated, make it almost impossible to turn qualitative data into quantitative data and to properly highlight trends. As a result, the analysis performed on feedback remains biased and inaccurate.

A problem that spreads across your organization

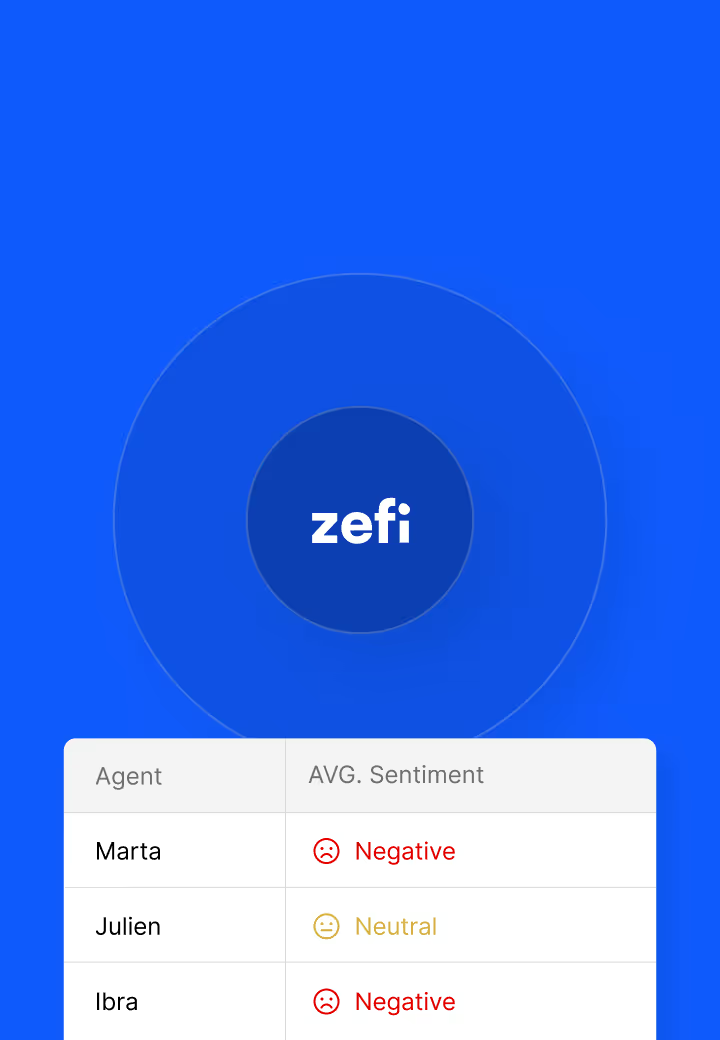

The frustrations of tagging user feedback are shared by every team that works around the product, from Sales to Customer Success. Still, the team probably most affected is Customer Support.

The life of a customer support specialist dealing with the problem looks more or less like this:

You've spent two days on a tough ticket and finally closed it with a happy customer who gave you a positive CSAT score. You can move on to the endless list of open tickets waiting for you, which you probably won't manage to close by the end of the day. But wait! Now you have to flag this ticket with at least two tags for the product team so that they can learn from it and prioritize the product based on customer feedback. You wish they could magically understand it themselves, but you accept your destiny, pick two seemingly relevant tags from the 700+ options, and move on to the next challenge. The list of tags has become an enormous mass of redundant terms that haven't been updated since the list was created, and it keeps growing over time. You are not sure you picked the best tags to describe this ticket, but other tickets are waiting, and you can't spend your whole day navigating the endless list just to make the product team (that you hate) happy. They never will be. You move on.

The consequences of all this fall on the Product Team, who navigate customer feedback blindly, relying on inaccurate analysis that doesn’t let them prioritize their backlog efficiently based on what their customers want.

A better way of analyzing feedback

We believe that in 2024, with the technology available today, people shouldn’t spend time manually tagging feedback, and product teams shouldn’t make decisions based on gut feeling.

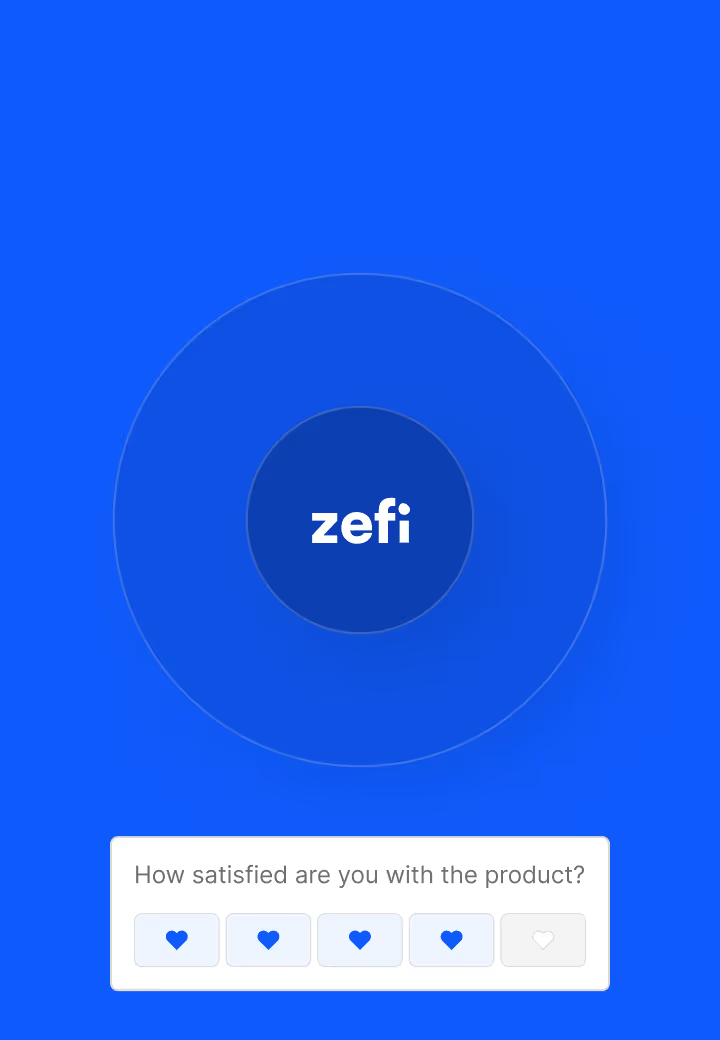

That’s why we created Zefi: to help organizations make sense of their user feedback with ease, extract actionable insights from high volumes of data scattered across multiple sources, take manual work off different teams' plates, and ensure that the Product department can make decisions based on an unbiased analysis of what their customers want. We want companies to build better products, offer better experiences, and grow by developing great relationships with their customers.

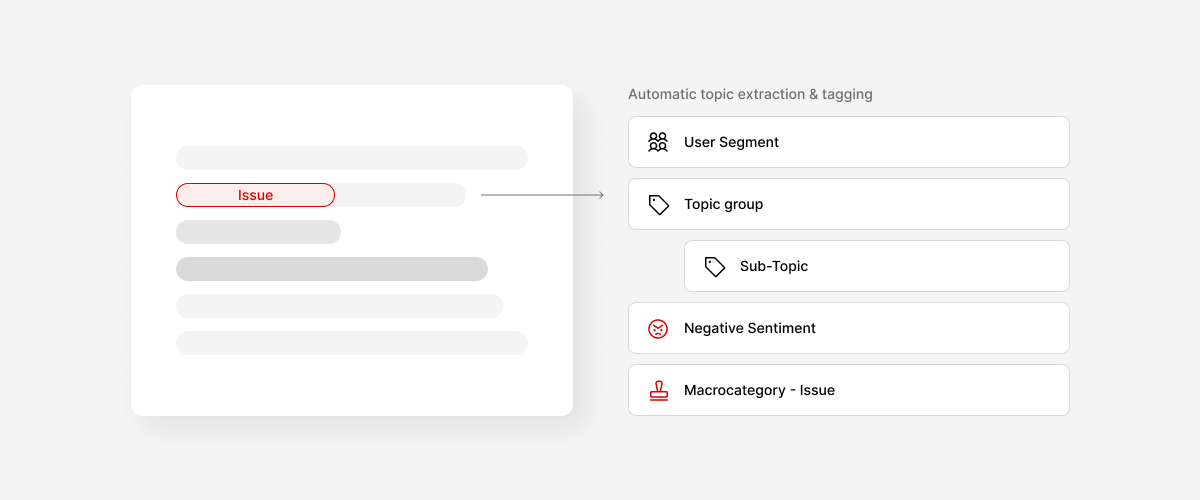

We designed Zefi's auto-tagging system to ensure that:

- It is automated

You just create a tag, and Zefi identifies the feedback that matches its context based on semantic meaning and flags it automatically. You won’t need to tag feedback one by one ever again. If you want, just read a few to make sure every tag has been applied correctly.

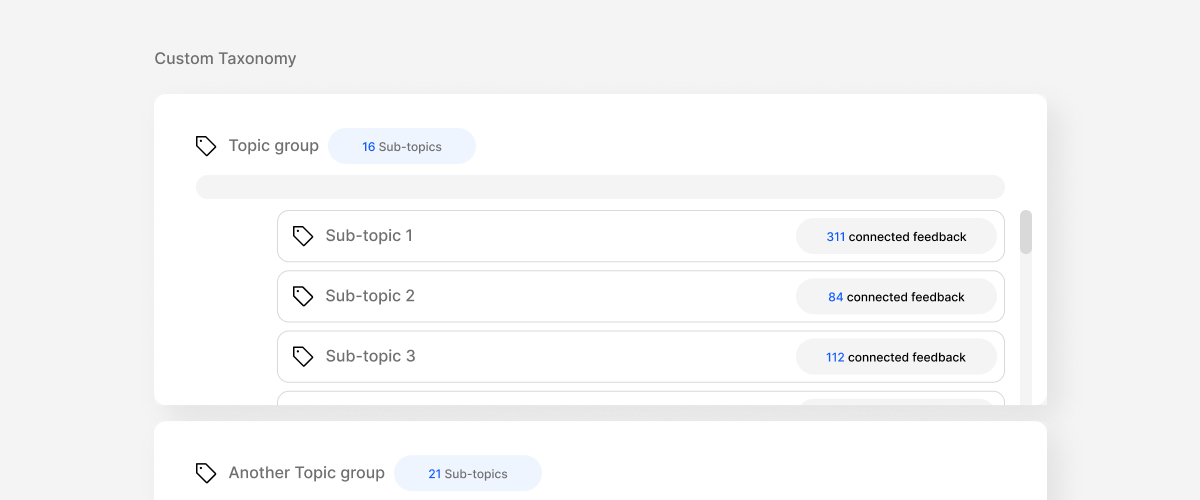

- It is tailored to your company's needs and fine-tuned to your product’s taxonomy

If you are a SaaS company helping other companies set their pricing, the keyword "pricing" in user feedback often has a different meaning than it would in reviews of food delivery companies. Without context about what your company does, off-the-shelf models like GPT-4 might give incorrect results when you use them to extract patterns and insights.

Any machine learning model you use to automate feedback tagging needs the context of what your company does and has to be customized and fine-tuned to your organization's taxonomy and product.

- Zefi is granular enough to give you actionable insights

Having 1,000 data points flagged as Onboarding doesn’t tell you much. You need to go deeper and understand what exactly the feedback says and who’s asking for it. Your feedback system needs to identify the granular, precise reasons behind the raw data. We give you actionable insights that you can use to prioritize more efficiently and build better products.

If you are still trying to make sense of your user feedback in a manual, biased, and inefficient way, schedule a call with us. It’s time to discover a better way to do it.